English is the Internet's number one language. There are tons of annotated datasets and packages available online, such as the excellent NLTK, the simple TextBlob, or spaCy, the fastest syntactic parser. In fact, part-of-speech tagging of English sentences is today considered a closed issue. During the past 11 years, its accuracy improved by just 1.04%, to a high of 97.50% (see the articles by Agić et al. (2013) and Manning et al. (2011)). Named entity recognition (identifying words and groups of words as concepts such as persons, locations, organizations, objects, etc.) is typically over 90% accurate, while automatic text summarization achieves accuracy over 88%. Despite all that, we cannot say that processing English sentences always runs smoothly and painlessly; it certainly has its problems. But you can handle these problems quickly, because a large community is tackling the same problems and sharing results and experience.

In contrast, when you process a language with richer morphology and more flexible word order, you need more pre-processing, a bit more creativity and much more time to reach the same accuracy as for English. To illustrate the problems with such languages, we'll take a look at Croatian (but the same approach can be used for other languages, such as Slovak, Finnish or Turkish). Since Croatian is less widely spoken than English, German, Spanish or French, it's not practical to develop a new text processing tool for each of its language varieties. It would take too much time and too much data. Instead, it is better to find a way to standardize all the words in the text being processed.

Croatian is a highly inflected language, which means that pronouns, nouns, adjectives and even some numerals decline, while verbs conjugate for person and tense. Verbs can be perfective (completed action) or imperfective (incomplete or repeated action), marked by different prefixes. It is also a linguistically under-resourced language with just a few annotated datasets available online. So, in most cases, you are expected to annotate tens of thousands of examples yourself to create a useful text processing neural network!

In addition, everyday online communication makes language processing harder. People tend to use colloquialisms, abbreviations and foreign words. They don't follow grammatical or orthographic norms and don't bother to correct misspellings. For instance, in tweets or social network comments, people often omit diacritics. If you read such text, you have no problem understanding it, but for a computer it becomes a problem to process. It treats these "new" words as ambiguous or unknown forms and cannot automatically link them to their diacritic form.

For example, in Croatian, č and ć become c, š becomes s, ž becomes z, and đ becomes d or dj, and people even use substitutes like ch or sh for č and š. The biggest issue is that, without diacritics, words with different meanings become identical, like kos (blackbird) and koš (basket), zao (evil) and žao (sorry) or voda (water) and vođa (leader). Getting the meaning of one word wrong can totally change the sentiment of the sentence. Thus, a good pre-processing step is diacritic restoration, which can be done with a letter-level or a word-level approach.
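To make the ambiguity concrete, here is a minimal Python 3 sketch of what happens when diacritics are dropped. The mapping table and the function name `strip_diacritics` are our own illustrative assumptions, not part of any library mentioned in this post.

```python
# Illustrative only: a hand-written mapping of Croatian diacritic letters
# to the plain ASCII letters people commonly type instead.
STRIP_MAP = str.maketrans({
    "č": "c", "ć": "c", "š": "s", "ž": "z", "đ": "d",
    "Č": "C", "Ć": "C", "Š": "S", "Ž": "Z", "Đ": "D",
})


def strip_diacritics(text):
    """Replace each Croatian diacritic letter with its plain ASCII letter."""
    return text.translate(STRIP_MAP)


pairs = [("kos", "koš"), ("zao", "žao"), ("voda", "vođa")]
for plain, accented in pairs:
    # After stripping, both words collapse into the same string.
    print(plain, accented, strip_diacritics(accented) == plain)
```

Each pair prints `True`, which is exactly why a program that only sees the stripped text cannot tell the two meanings apart without more context.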

The word-level approach gives better results but requires a large lexicon, which usually doesn't cover non-standard word forms. For Croatian, there is a great diacritic restoration tool, ReLDI, with an accompanying article that describes the word-level approach. Similar tools exist for other languages, such as diaqres for Slovak and restore-tonemark for Vietnamese. Asahiah and his associates from the University of Cambridge created an excellent survey of diacritic restoration in abjad and alphabet writing systems. The authors explain different approaches for different languages and list past achievements, so the survey is a nice starting point.
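As a rough illustration of the word-level idea, the sketch below builds a tiny lexicon from correctly written words and restores each known word to its most frequent diacritic form. This is not how ReLDI is implemented; the mini-corpus and the names `build_lexicon` and `restore` are hypothetical, and a real tool would also use context to choose between candidates such as sto and što.

```python
import re
from collections import Counter, defaultdict

STRIP_MAP = str.maketrans("čćšžđČĆŠŽĐ", "ccszdCCSZD")

# Hypothetical mini-corpus of correctly written words; a real system would
# count word forms in a large corpus or lexicon.
CORPUS = "što što što sto rješava rješava zločine kafića pjevačicu".split()


def build_lexicon(words):
    """Map each stripped form to a counter of the diacritic forms seen for it."""
    lexicon = defaultdict(Counter)
    for word in words:
        lexicon[word.translate(STRIP_MAP)][word] += 1
    return lexicon


def restore(text, lexicon):
    """Replace every known word with its most frequent diacritic form."""
    def replace(match):
        word = match.group(0)
        candidates = lexicon.get(word.lower())
        if not candidates:
            return word  # unknown word: leave it unchanged
        best = candidates.most_common(1)[0][0]
        # Keep a leading capital letter from the input.
        return best[0].upper() + best[1:] if word[0].isupper() else best
    return re.sub(r"\w+", replace, text)


LEXICON = build_lexicon(CORPUS)
print(restore("Osim sto rjesava zlocine", LEXICON))
```

Note how "Osim" passes through untouched because it is not in the toy lexicon. That is the weakness described above: coverage depends entirely on the lexicon.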

Here is an example of diacritic restoration with ReLDI. All diacritics are restored, and the word sto (hundred) becomes što (what), which is the meaning intended in this sentence.

from reldi.restorer import DiacriticRestorer

dr = DiacriticRestorer('hr')

dr.authorize('my_username','my_password')

sentence = "Osim sto rjesava zlocine, inspektor Skrga je istovremeno zaljubljen u dvije djevojke – vlasnicu kafica Biserku i fatalnu zavodnicu, barsku pjevacicu Andelu."

dr.restore(sentence)

Output:

"Osim što rješava zločine, inspektor Škrga je istovremeno zaljubljen u dvije djevojke – vlasnicu kafića Biserku i fatalnu zavodnicu, barsku pjevačicu Anđelu."

Another useful pre-processing step is stemming. As explained in our previous post, stemming removes the suffixes of words. You can create your own stemmer by encoding the standard grammatical rules of your language as regular expressions, e.g. with the Python module re. With a list of such rules you can achieve better accuracy, because different forms of the same word become comparable.

Here is an example of stemming with the re module for Croatian. We remove suffixes like jući or smo from verbs whose stem ends in ava, eva, iva or uva. So, this is a customized rule for creating stems from verbs like spavati, pjevati, plivati or čuvati. As you can see, different forms of the verb rješavati (to solve) are reduced to the same stem by this short algorithm.

import re

stem, suffix = ".+(e|a|i|u)va jući|smo|ste|jmo|jte|ju|la|le|li|lo|mo|na|ne|ni|no|te|ti|še|hu|h|j|m|n|o|t|v|š|".split(' ')  # stem pattern, then the suffix alternatives (the trailing | also allows an empty suffix)

rule = re.compile(r'^(' + stem + r')(' + suffix + r')$')  # group 1 is the stem, the last group is the suffix

words = ["rješavah", "rješavajući", "rješavasmo", "rješavao", "spavala", "pjevaj", "čuvajmo", "plivajte"]

stems = [rule.match(word).group(1) for word in words]  # Python 3 strings are Unicode, so no decode() is needed

print(stems)

Output:

['rješava', 'rješava', 'rješava', 'rješava', 'spava', 'pjeva', 'čuva', 'pliva']

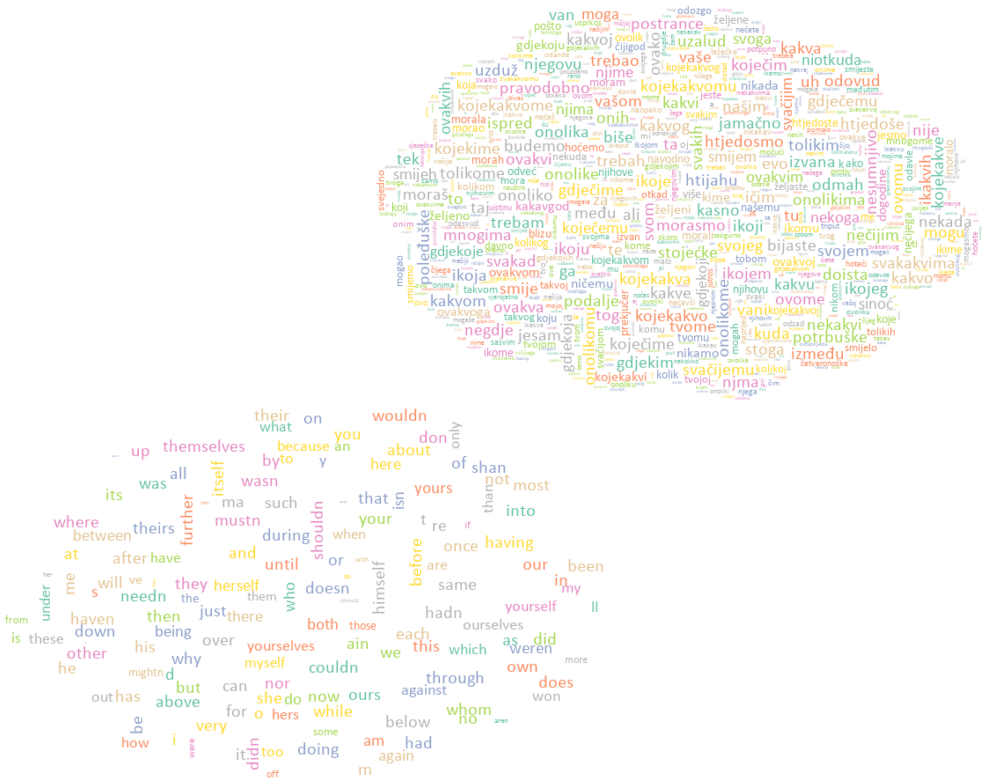

In Croatian, even something simple as a list of stop words can grow into huge dimensions if you want to include all diversity and not to stem the dataset. Just to explain, stop words are words that don’t bring any useful information in the processing of natural language and they are usually filtered out before or after the processing. They refer to the most “common” words like I, her, by, about, here, etc. Removing such words in the context of sentiment analysis can easily upgrade your accuracy by 5%!

Example of wordclouds for stopwords in English and Croatian. Cloud sizes are the same but the number of Croatian stop words (or cloud density) exceeds the number of English stopwords by several times.
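The stop word filtering described above could be sketched in Python 3 as follows. The stop word set here is a hypothetical, deliberately tiny sample, and `remove_stop_words` is our own helper name rather than a function from any package.

```python
import re

# Hypothetical, deliberately tiny stop word list; real lists are much longer,
# especially for Croatian, where inflected forms multiply the entries.
STOP_WORDS = {"i", "u", "je", "na", "se", "da", "za", "što", "osim"}


def remove_stop_words(text, stop_words=STOP_WORDS):
    """Lowercase the text, split it into words and drop the stop words."""
    tokens = re.findall(r"\w+", text.lower())
    return [token for token in tokens if token not in stop_words]


print(remove_stop_words("Inspektor Škrga je zaljubljen u dvije djevojke"))
```

Using a set keeps each membership check fast even when the list grows to thousands of word forms.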

You can also create a lemmatization algorithm that connects different forms of the same word, although it's far more complex and time-consuming than straightforward stemming. Lemmatization is applicable in a whole range of procedures, from simple text search up to complex parsing. There are two dominant approaches: algorithmic (rule-based and realized as FSA, finite state automata) and relational (data-driven and realized with databases). The first is very complex, with a system of rules handling the different word forms of a lemma; it is difficult to create but works well for unknown words. The second works with a list of all possible forms of each lemma; it's relatively simple, but it needs a huge vocabulary and still cannot process unknown words. Therefore, before lemmatizing a text, it is really useful to correct spelling and writing errors.
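To show how the two approaches differ, here is a toy Python 3 sketch that tries a relational lookup first and falls back to a single suffix rule for unknown words. Everything in it is an assumption for illustration: the table, the one rule (which only fits some feminine nouns ending in -a) and the name `lemmatize`. A real algorithmic lemmatizer needs a far richer rule system.

```python
import re

# Hypothetical form-to-lemma table (the relational approach); a real one
# would list every inflected form of every lemma.
FORM_TO_LEMMA = {
    "ribe": "riba", "ribu": "riba", "ribom": "riba",
    "rješava": "rješavati", "rješavao": "rješavati",
    "licu": "lice", "lica": "lice",
}

# Hypothetical suffix rule (a crude stand-in for the algorithmic approach):
# rewrite a case ending into the nominative singular ending -a.
SUFFIX_RULES = [
    (re.compile(r"(ama|om|e|u|i)$"), "a"),
]


def lemmatize(word):
    """Look the word up first; fall back to suffix rules for unknown words."""
    word = word.lower()
    if word in FORM_TO_LEMMA:
        return FORM_TO_LEMMA[word]
    for pattern, replacement in SUFFIX_RULES:
        if pattern.search(word):
            return pattern.sub(replacement, word)
    return word


print([lemmatize(w) for w in ["ribe", "rješava", "žabe", "pravde"]])
```

The words žabe and pravde are not in the table, so only the rule can handle them. The same rule would give wrong lemmas for many other words, which is why rule systems become complex so quickly.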

Example of lemmatization for Croatian with ReLDI. Nouns and pronouns are converted into their nominative singular form, while verbs are replaced by their infinitive.

from reldi.tagger import Tagger

t = Tagger('hr')

t.authorize('my_username','my_password')

sentence = "Škrgin detektivski nos pomaže mu nanjušiti smrdljive ribe i privesti ih licu pravde."

t.tagLemmatise(sentence)

Output:

["škrga", "detektiv", "nos", "pomoć", "on", "njušiti", "smrad", "riba", "i", "privesti", "oni", "lice", "pravda"]

To sum up: when you are processing an inflectionally rich and less widely spoken language, keep in mind that you'll probably end up annotating your own data and spending much more time on pre-processing. Restore diacritics and create a good stemming or lemmatization algorithm to rein in the variety of word forms. This can be time-consuming, but eventually it will pay off with higher accuracy.

In the next article, we will go through a step-by-step procedure for creating a text classifier with the scikit-learn and NLTK packages.