Recently, the word “mining” has been heard in every corner. The reason is not only the bitcoin hype but also a wave of interest in machine learning tools for analysing customer behavior. Text mining is one such tool. In this series of articles, we will share the most powerful text mining techniques and identify some of the most widely used language processing tools. We will also clarify common issues in text processing.

What is “text mining”?

Have you ever posted a review of a new phone you bought or commented on a video game you tried? All the data that you and other customers leave online is carefully collected by companies and product owners. It is called “fresh text data” or “unstructured data”. Companies put a lot of effort and money into analysing this unstructured data as accurately as possible, because it is key to understanding customers’ behavior. This is where text mining enters the stage.

Text mining techniques (like sentiment analysis or concept extraction) extract meaningful information from everyday writing. Such text often contains slang, sarcasm, inconsistent syntax, grammatical errors, double entendres, etc. These create a problem for machine algorithms, because text mining techniques expect correctly structured sentences or documents. So, before you apply an algorithm, writing errors need to be corrected and word forms standardised to make them more comparable. The best way to do that is with natural language processing tools.
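To give a rough feeling for what “standardising” can mean at the very simplest level, here is a minimal sketch that lowercases a review and strips punctuation noise. It is our own illustration, not a full NLP pipeline: the function name `normalize` and the choice to keep only ASCII letters, digits, apostrophes and hyphens are assumptions, and they would not suit languages with other alphabets or diacritics.

```python
import re

def normalize(text):
    """Lowercase the text and replace everything except ASCII letters,
    digits, apostrophes, hyphens and whitespace with a space.
    (Hypothetical helper; English-only by assumption.)"""
    lowered = text.lower()
    cleaned = re.sub(r"[^a-z0-9'\-\s]", " ", lowered)
    # split() with no arguments also collapses repeated whitespace
    return cleaned.split()

print(normalize("LOVE this phone!!! Battery's great, camera... meh."))
```

Slang, sarcasm and spelling errors survive this step untouched, which is exactly why the heavier tools described below are needed.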

Natural language processing (NLP) tools help computers to successfully process large natural language corpora. In a way, they teach computers how to read. There is a thin line between NLP and text mining and most people consider them synonyms, but there is a difference. While text mining discovers relevant information in the text by transforming it into data, natural language processing transforms the text itself by means of linguistic analysis. Its ultimate goal is to accomplish human-like language processing.

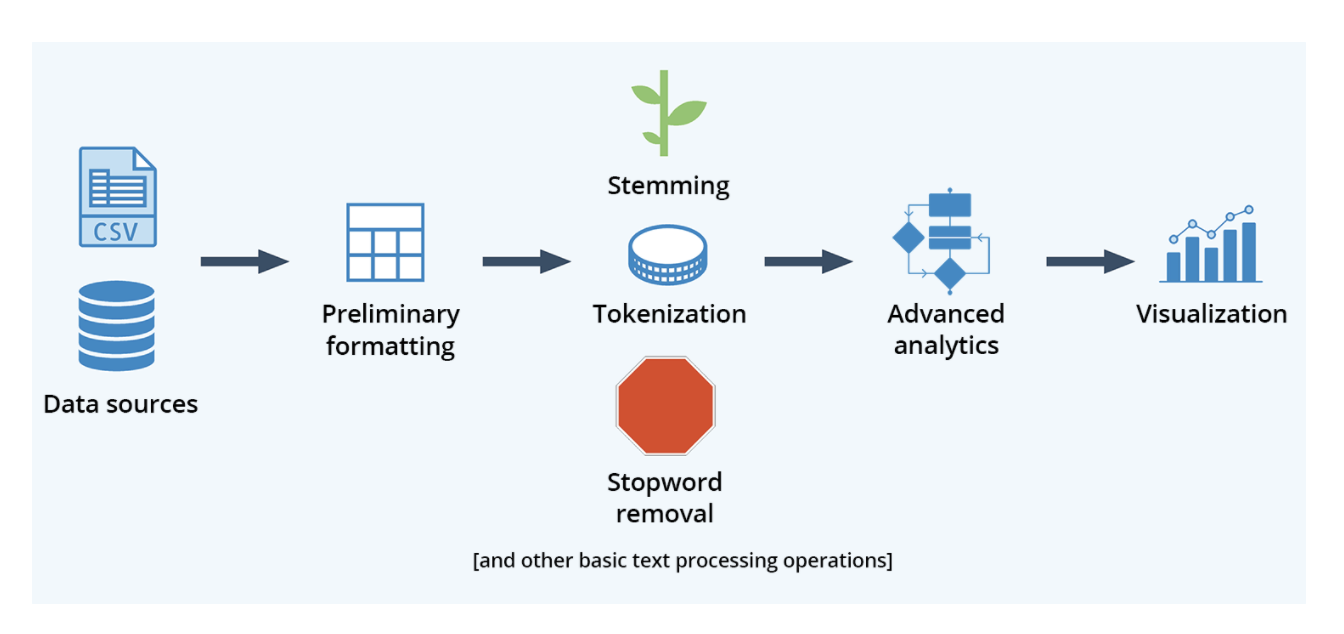

Text mining and NLP are commonly used together because you get better results if the text is pre-processed and structured first. For Python, there is an excellent NLP implementation: the nltk package. You can use other packages or, even better, implement your own algorithm! Below we will explain some of the most helpful morphology and syntax tools and give examples of how to use nltk.

NLP tools for morphology and syntax processing

Sentence boundary detection (or sentence tokenizing) – finds sentence breaks in a given text. You may ask why this is needed when sentences are usually separated by punctuation marks (like “.”, “!” or “?”). The problem appears when punctuation is also used to mark abbreviations or compound words (like “quick-thinking” or “hurly-burly”).

Example of sentence tokenizing with nltk:

from nltk import sent_tokenize

sentence = "Prof. Balthazar is a nice scientist who always solves the problems of his fellow-citizens by means of a magic machine."

print(sent_tokenize(sentence))

Output:

['Prof. Balthazar is a nice scientist who always solves the problems of his fellow-citizens by means of a magic machine.']

In this sentence, “Prof.” would be treated as a separate sentence if you simply split the text on dots. To avoid this issue you can use nltk.sent_tokenize(), which classifies the whole example as one sentence. This function is available for 17 European languages, but sadly not for Croatian (we will cover what to do for Croatian or another unsupported language in our next article).
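To make the problem concrete without any library, the sketch below compares a naive split on sentence-ending punctuation with a version that consults a small abbreviation list. This is only an illustration of the idea and not how nltk works internally; the `ABBREVIATIONS` set and both function names are our own assumptions.

```python
import re

# Hypothetical, deliberately tiny abbreviation list
ABBREVIATIONS = {"prof.", "dr.", "mr.", "mrs."}

def naive_split(text):
    """Split after every '.', '!' or '?' that is followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def split_with_abbreviations(text):
    """Close a sentence only when a token ends in '.', '!' or '?'
    and the token is not a known abbreviation."""
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        if token[-1] in ".!?" and token.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # trailing text without closing punctuation
        sentences.append(" ".join(current))
    return sentences

text = "Prof. Balthazar is a nice scientist. He solves problems!"
print(naive_split(text))               # "Prof." ends up as its own sentence
print(split_with_abbreviations(text))  # two sentences
```

A hand-written list like this never covers every abbreviation, which is one reason to prefer a trained tokenizer when one exists for your language.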

Word segmentation (or word tokenizing) – separates continuous text into independent words. In most Western languages (such as English, Croatian or French) words are separated by spaces. But for languages like Chinese or Japanese, where words aren’t delimited, it can be quite a problem!

Example of word tokenizing with nltk for the sentence “He solves problems with his inventions and his hurly-burlytron machine that drips ideas”. nltk returns a list of all the words in the sentence, keeping hyphenated compounds such as “hurly-burlytron” as single tokens.

from nltk import word_tokenize

text = "He solves problems with his inventions and his hurly-burlytron machine that drips ideas."

print(word_tokenize(text))

Output:

['He', 'solves', 'problems', 'with', 'his', 'inventions', 'and', 'his', 'hurly-burlytron', 'machine', 'that', 'drips', 'ideas', '.']
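For space-delimited languages, a similar result can be approximated with a single regular expression. The sketch below is an assumption-laden simplification, not nltk's actual tokenizer: the pattern and the name `simple_word_tokenize` are ours, and it ignores contractions, numbers with separators and many other cases.

```python
import re

# A run of word characters, optionally joined by hyphens,
# or any single character that is neither a word character nor whitespace
TOKEN_PATTERN = re.compile(r"\w+(?:-\w+)*|[^\w\s]")

def simple_word_tokenize(text):
    """Return words (hyphenated compounds kept whole) and punctuation marks."""
    return TOKEN_PATTERN.findall(text)

text = "He solves problems with his inventions and his hurly-burlytron machine that drips ideas."
print(simple_word_tokenize(text))
```

On this particular sentence the sketch gives the same tokens as the nltk output above, but it has no answer for languages such as Chinese or Japanese, where there are no spaces to rely on.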

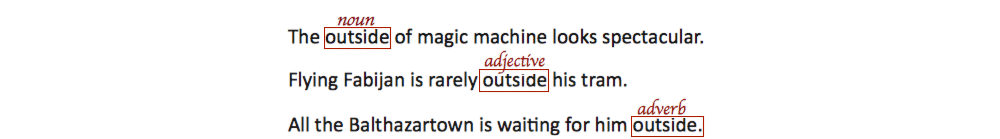

Part-of-speech (POS) tagging (or grammatical tagging) determines the class of each word, i.e. it identifies the word as a noun, verb, adjective, adverb, etc. Many words can be used as multiple parts of speech, like “outside” in the example below. Further, languages differ in how they distinguish word classes, and less inflected languages (like English) have more ambiguity. There are even some Polynesian languages that lack the distinction between nouns and verbs.

Example: depending on the context, the word “outside” can be a noun, adjective or adverb.

The same example processed with nltk:

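import nltk

from nltk import word_tokenize

from pprint import pprint

# "outside" used as a noun

text = word_tokenize("The outside of magic machine looks spectacular.")

pprint(nltk.pos_tag(text))

# "outside" used as an adjective

text2 = word_tokenize("Flying Fabijan is rarely outside of his tram.")

pprint(nltk.pos_tag(text2))

# "outside" used as an adverb

text3 = word_tokenize("All the Balthazartown is waiting for him outside.")

pprint(nltk.pos_tag(text3))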

Output:

[('The', 'DT'),

('outside', 'NN'),

('of', 'IN'),

('magic', 'JJ'),

('machine', 'NN'),

('looks', 'VBZ'),

('spectacular', 'JJ'),

('.', '.')]

[('Flying', 'VBG'),

('Fabijan', 'NNP'),

('is', 'VBZ'),

('rarely', 'RB'),

('outside', 'JJ'),

('of', 'IN'),

('his', 'PRP$'),

('tram', 'NN'),

('.', '.')]

[('All', 'PDT'),

('the', 'DT'),

('Balthazartown', 'NNP'),

('is', 'VBZ'),

('waiting', 'VBG'),

('for', 'IN'),

('him', 'PRP'),

('outside', 'RB'),

('.', '.')]
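The tags follow the Penn Treebank convention, in which NN marks a noun, JJ an adjective and RB an adverb. As a small follow-up, the sketch below takes the three tagged sentences exactly as printed above (hard-coded, so nltk is not needed to run it) and collects every tag each word received, which is one simple way to spot ambiguous words in a tagged corpus. The helper name `tags_by_word` is our own.

```python
from collections import defaultdict

# The tagger output shown above, copied as plain data
tagged_sentences = [
    [("The", "DT"), ("outside", "NN"), ("of", "IN"), ("magic", "JJ"),
     ("machine", "NN"), ("looks", "VBZ"), ("spectacular", "JJ"), (".", ".")],
    [("Flying", "VBG"), ("Fabijan", "NNP"), ("is", "VBZ"), ("rarely", "RB"),
     ("outside", "JJ"), ("of", "IN"), ("his", "PRP$"), ("tram", "NN"), (".", ".")],
    [("All", "PDT"), ("the", "DT"), ("Balthazartown", "NNP"), ("is", "VBZ"),
     ("waiting", "VBG"), ("for", "IN"), ("him", "PRP"), ("outside", "RB"), (".", ".")],
]

def tags_by_word(sentences):
    """Map each lowercased word to the set of tags it was given."""
    tags = defaultdict(set)
    for sentence in sentences:
        for word, tag in sentence:
            tags[word.lower()].add(tag)
    return tags

tags = tags_by_word(tagged_sentences)
print(sorted(tags["outside"]))
# Words that received more than one distinct tag
print(sorted(word for word, seen in tags.items() if len(seen) > 1))
```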

Stemming – the process of reducing inflected words to their word stem, i.e. their root form. It doesn’t have to be morphologically correct; you can just chop off the ends of words. For example, “solv” is the stem of the words “solve” and “solved”.
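The “just chop off the ends” idea can be sketched in a few lines. The suffix list and the minimum stem length below are arbitrary assumptions chosen for this illustration; real stemmers apply far more careful, ordered rules.

```python
# Hypothetical suffix list, checked in this order
SUFFIXES = ("ing", "ed", "es", "s", "e")

def crude_stem(word, min_stem_length=3):
    """Strip the first matching suffix, provided that at least
    min_stem_length characters remain."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem_length:
            return word[: -len(suffix)]
    return word

for word in ["solve", "solved", "solves", "solving"]:
    print(word, "->", crude_stem(word))
```

All four forms collapse to “solv”, but a rule set this blunt will quickly mangle other words, which is where the established algorithms come in.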

The most used algorithms are Porter’s algorithm (English only), its improvement Snowball (which supports other languages such as Arabic, Spanish and German) and Lancaster’s (a very aggressive English stemmer).

Fun fact: Martin Porter named his stemming framework Snowball as a tribute to SNOBOL, the excellent string handling language created by Messrs Farber, Griswold, Poage and Polonsky in the 1960s. Initially, though, he wanted to call it “strippergram” because it provides a ‘suffix STRIPPER GRAMmar’.

Example of how to use the most popular stemmers with nltk for the word “agreement”. You can see that Lancaster’s algorithm is the most aggressive:

from nltk.stem.porter import PorterStemmer

from nltk.stem.lancaster import LancasterStemmer

from nltk.stem import SnowballStemmer

porter = PorterStemmer()

lancaster = LancasterStemmer()

snowball = SnowballStemmer("english")

print(porter.stem("agreement"))

print(lancaster.stem("agreement"))

print(snowball.stem("agreement"))

Output:

agreement

agr

agreement

At Kraken, we created our own stemmer for the Croatian language based on its grammatical rules. In the context of sentiment analysis (in our case, of Facebook comments), this step was crucial for transforming words into more similar forms. When you are creating a stemmer, be careful not to over-stem or under-stem: you can cut off so much that almost nothing but the first letter remains, or leave so much that multiple forms of the same word survive (see the example below).

Example of over-stemming and under-stemming with Porter’s algorithm for the words “organization” and “absorption”. Over-stemming reduces words with different meanings to the same root, while under-stemming leaves words with the same meaning with different roots:

# Over-stemming

print(porter.stem("organization"))

print(porter.stem("organs"))

# Under-stemming

print(porter.stem("absorption"))

print(porter.stem("absorbing"))

Output:

organ

organ

absorpt

absorb
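When you evaluate a stemmer on a larger word list, it helps to group the words by the stem they produce. The sketch below does this for the Porter results printed above (hard-coded as a dictionary so it runs without nltk); the helper name `group_by_stem` is our own. A group that mixes unrelated words hints at over-stemming, while related words landing in separate groups hint at under-stemming.

```python
from collections import defaultdict

# Porter stems as shown in the output above
porter_stems = {
    "organization": "organ",
    "organs": "organ",
    "absorption": "absorpt",
    "absorbing": "absorb",
}

def group_by_stem(stems):
    """Invert a word -> stem mapping into stem -> list of words."""
    groups = defaultdict(list)
    for word, stem in stems.items():
        groups[stem].append(word)
    return dict(groups)

for stem, words in group_by_stem(porter_stems).items():
    print(stem, words)
```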

Lemmatization – similarly to stemming, lemmatization reduces words to their lemmas (dictionary forms), but it does so with the use of a vocabulary and morphological analysis. It is much more complex and time-consuming because it has to take each word’s context into account. So, most of the time stemming is enough, but if needed (for example, when building a text vocabulary), nltk provides the WordNet lemmatizer, which is based on WordNet, a large dictionary of English words.

Example of the difference between the WordNet lemmatizer and the Snowball stemmer; you can see that the lemmatizer uses a vocabulary:

from nltk.stem import WordNetLemmatizer

wnl = WordNetLemmatizer()

print(wnl.lemmatize("libraries"))

print(snowball.stem("libraries"))

Output:

library

librari
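To illustrate why lemmatization needs both a vocabulary and some knowledge of the word’s role in the sentence, here is a toy dictionary lookup keyed by word and part of speech. The `LEXICON` entries, the one-letter POS codes and the function name are hypothetical and cover only a handful of words; a real lemmatizer draws on a full dictionary such as WordNet.

```python
# Hypothetical mini-lexicon: (word, part of speech) -> lemma
# "n" = noun, "v" = verb, "a" = adjective
LEXICON = {
    ("libraries", "n"): "library",
    ("meeting", "n"): "meeting",
    ("meeting", "v"): "meet",
    ("better", "a"): "good",
}

def toy_lemmatize(word, pos="n"):
    """Look the word up for the given part of speech;
    fall back to the lowercased word if it is unknown."""
    return LEXICON.get((word.lower(), pos), word.lower())

print(toy_lemmatize("libraries"))     # a dictionary form, not a chopped stem
print(toy_lemmatize("meeting", "n"))  # as a noun it is already a lemma
print(toy_lemmatize("meeting", "v"))  # as a verb it maps to "meet"
print(toy_lemmatize("better", "a"))   # irregular form, no suffix rule helps
```

The same surface form can have different lemmas depending on its part of speech, which is the context a stemmer never looks at.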

Terminology extraction – extracts the crucial terms from a given sentence or text. POS tagging is an essential step here because words are scored differently depending on their part of speech. For English, there are some great Python implementations, such as the packages rake-nltk (RAKE, the Rapid Automatic Keyword Extraction algorithm) and kea (KEA, the Keyphrase Extraction Algorithm).
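As a rough idea of how a RAKE-style extractor scores candidate terms, here is a simplified sketch in plain Python. It is not the rake-nltk implementation: the tiny `STOPWORDS` set and the function names are our own assumptions, and this variant relies on a stop-word list rather than POS tags to find phrase boundaries. Each word is scored as degree divided by frequency, where degree is the total length of the phrases the word occurs in, and a phrase scores the sum of its word scores.

```python
import re
from collections import defaultdict

# Hypothetical, deliberately tiny stop-word list
STOPWORDS = {"a", "an", "and", "the", "of", "for", "is", "in", "to", "with", "his", "that"}

def candidate_phrases(text):
    """Split the text at punctuation and stop words into candidate phrases."""
    phrases = []
    for fragment in re.split(r"[.,!?;:]", text.lower()):
        current = []
        for word in re.findall(r"[a-z][a-z\-]*", fragment):
            if word in STOPWORDS:
                if current:
                    phrases.append(current)
                current = []
            else:
                current.append(word)
        if current:
            phrases.append(current)
    return phrases

def rake_scores(text):
    """Return (phrase, score) pairs, highest score first, ties alphabetical."""
    phrases = candidate_phrases(text)
    frequency, degree = defaultdict(int), defaultdict(int)
    for phrase in phrases:
        for word in phrase:
            frequency[word] += 1
            degree[word] += len(phrase)
    word_score = {w: degree[w] / frequency[w] for w in frequency}
    scored = {" ".join(p): sum(word_score[w] for w in p) for p in phrases}
    return sorted(scored.items(), key=lambda item: (-item[1], item[0]))

text = "Balthazar solves the problems of his fellow-citizens with a magic machine."
for phrase, score in rake_scores(text):
    print(phrase, score)
```

Longer phrases made of words that tend to appear together score highest, so “magic machine” outranks the single word “problems” in this sentence.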

As mentioned, the combination of text mining and NLP makes it possible to extract valuable information from unstructured plain text. Today, companies use both to monitor social media (see our previous post) in order to understand users’ emotions or perceptions related to a given topic.

In our next article, we will discuss the problems of processing Croatian and other languages for which these basic NLP tools are not available in the nltk package.