Human language is complex and constantly changing. Teaching a machine to handle the grammatical nuances, cultural variations, slang and misspellings that occur in online mentions is difficult. Teaching it to understand how context affects tone is even harder! In our last article, we explained the different types of sentiment analysis; now we’ll focus on the problems.

Sentiment analysis nowadays is normally around 85% accurate, while the degree of agreement between humans is around 80%, so can it really go much higher? We all have different perspectives and experience the same sentences differently. Besides that, there are other problems that computers have to overcome to understand a text, such as negations, sarcasm and jokes. We’ll try to explain them and give you some insight into how to work around them, where possible.

“Sentiment analysis requires a deep understanding of the explicit and implicit, regular and irregular, and syntactic and semantic language rules.” (Cambria et al., 2013)

Negations

If you are using a classical “bag of words” model (explained in our previous article), you have a problem, because it doesn’t work well with negations. Since the model ignores word order, it doesn’t recognize the opposite meanings of sentences like “I like this chocolate” and “I do not like this chocolate”. It would probably give them the same score, driven by the positive word “like”. To work around this problem, you can attach the negation to the verb that follows it (e.g. notlike) and treat the result as a new word. Before doing that, it is a good idea to expand all abbreviated negations into their longer forms (e.g. don’t to do not).
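The negation trick described above can be sketched in a few lines of Python. This is only a minimal illustration under our own assumptions: the `CONTRACTIONS` table and the `NEGATIONS` set are small, hypothetical examples, and the code glues the negation to whatever token follows it rather than checking that the token is really a verb (that would need a part-of-speech tagger).

```python
import re

# Hypothetical, deliberately small mapping; a real one would cover more forms.
CONTRACTIONS = {
    "don't": "do not",
    "doesn't": "does not",
    "didn't": "did not",
    "isn't": "is not",
    "wasn't": "was not",
    "can't": "can not",
    "won't": "will not",
}

# Hypothetical set of negation words to merge with the next token.
NEGATIONS = {"not", "no", "never"}


def expand_contractions(text):
    """Restore abbreviated negations to their long form (don't -> do not)."""
    text = text.lower().replace("\u2019", "'")  # normalize curly apostrophes
    for short, full in CONTRACTIONS.items():
        text = text.replace(short, full)
    return text


def merge_negations(text):
    """Glue each negation word to the token that follows it (not like -> notlike)."""
    tokens = re.findall(r"[a-z']+", expand_contractions(text))
    merged = []
    i = 0
    while i < len(tokens):
        if tokens[i] in NEGATIONS and i + 1 < len(tokens):
            merged.append(tokens[i] + tokens[i + 1])
            i += 2  # skip the token that was just merged
        else:
            merged.append(tokens[i])
            i += 1
    return merged


print(merge_negations("I like this chocolate"))
print(merge_negations("I don't like this chocolate"))
```

With this pre-processing, the two sentences no longer share the token “like”, so a bag-of-words model has a chance to learn a separate weight for “notlike”.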

Irony, Sarcasm, Metaphors, Jokes

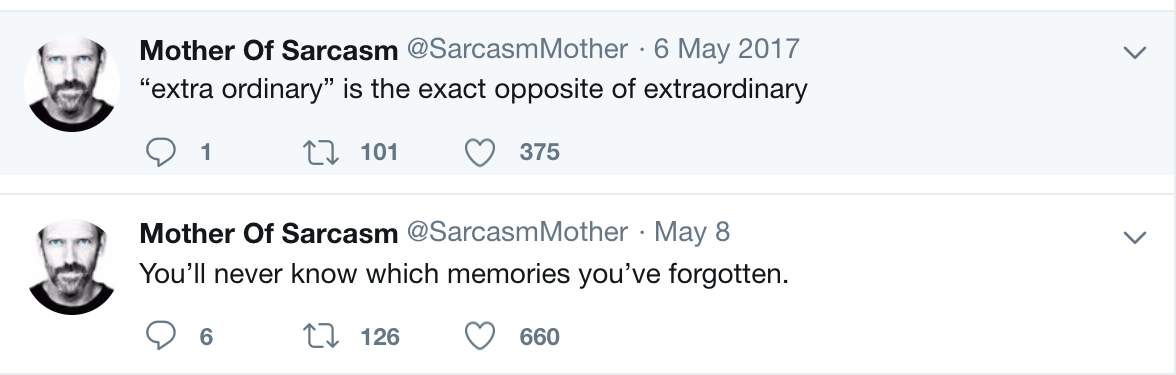

Well, computers don’t have a sense of humor and are not able to understand figurative language. Most of the time, even people aren’t really sure what was intended. Humor as a phenomenon is still undefined; we cannot generalize or formalize what makes people laugh. Sarcasm and irony usually turn the positive literal meaning of a word into something negative, but how can we be sure that a person didn’t literally mean what he or she wrote? That is why it is practically impossible to build a universal detector that works well with irony, sarcasm, metaphors and jokes without a thorough investigation of the context, and even then it doesn’t work great.

Subjectivity and tone

As stated before, objective texts don’t contain explicit sentiment, but they can imply one. Let’s say we have the following two sentences: “The weather outside is nice” and “The weather outside is sunny”. Most people would say that the first one is positive and the second one neutral, right? So we have to treat predicates (adjectives, verbs and some nouns) differently depending on how they create sentiment. In the example above, “nice” is more subjective than “sunny”. If possible, try to detect the subjectivity of each word.
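One simple way to approximate word-level subjectivity is a lexicon lookup. The sketch below is only an illustration: the `SUBJECTIVITY` scores and the 0.5 threshold are values we made up for the example, not taken from any published lexicon.

```python
# Hypothetical subjectivity scores in [0, 1]:
# 0 = purely descriptive, 1 = pure opinion.
SUBJECTIVITY = {"nice": 0.9, "awful": 0.9, "sunny": 0.2, "rainy": 0.2}


def sentence_subjectivity(sentence, default=0.0):
    """Return the highest subjectivity score among the words of the sentence."""
    words = sentence.lower().strip(".!?").split()
    return max((SUBJECTIVITY.get(w, default) for w in words), default=default)


def is_subjective(sentence, threshold=0.5):
    """Treat a sentence as subjective if any word reaches the threshold."""
    return sentence_subjectivity(sentence) >= threshold


print(is_subjective("The weather outside is nice"))
print(is_subjective("The weather outside is sunny"))
```

Sentences that fall below the threshold can then be routed to the neutral class before any polarity scoring takes place.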

Comparison

Opinion can be direct or comparative. The direct opinion gives a straightforward impression of some entity, while comparative sentiment can be quite challenging to detect. Here are some examples:

This product is second to none.

This one is better than the old one.

This one is better than nothing.

How would you classify these examples? A quick, word-level reading would probably give: the first as negative, the second as positive and the third as neutral. Yet “second to none” is actually strong praise, which shows how easily such phrases mislead. Now let’s say we have a bit more context and know that the old product is useless. The second example then says almost the same as the third one, so is it neutral? Context can make a difference. You can get it from the explicit conversation, the user’s attitude or the overall set of recent posts/tweets about a topic.

Emojis

According to Guibon et al., there are two types of emojis: Western (one character or a combination of a few characters, rotated sideways, e.g. :-O) and Eastern (a longer combination of characters, read vertically, e.g. ¯ \ _ (ツ) _ / ¯). Emojis play quite an important role in the sentiment of a text, especially in tweets and social network comments. When you are processing tweets, you should always do a character-level analysis in addition to the word-level one. The reason is that transforming all emojis into tokens can noticeably improve the accuracy of your sentiment analyzer. You can use this great list of emojis and their Unicode characters for this kind of pre-processing.
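As a rough sketch of this pre-processing step, the character sequences can be replaced with placeholder tokens before the text is split into words. The `EMOTICON_TOKENS` table and the `EMO_*` token names below are our own hypothetical choices; in practice you would build the table from a full list.

```python
import re

# Hypothetical emoticon-to-token table; extend it from a list of your choice.
EMOTICON_TOKENS = {
    ":-)": "EMO_SMILE",
    ":)": "EMO_SMILE",
    "(^^)": "EMO_SMILE",
    ":-(": "EMO_SAD",
    "(><)": "EMO_SAD",
    "(T_T)": "EMO_CRY",
    ":-O": "EMO_SURPRISE",
}

# Put longer emoticons first so a short one never wins over a longer match.
_PATTERN = re.compile(
    "|".join(re.escape(e) for e in sorted(EMOTICON_TOKENS, key=len, reverse=True))
)


def replace_emoticons(text):
    """Replace every known emoticon with a space-padded placeholder token."""
    return _PATTERN.sub(lambda m: " " + EMOTICON_TOKENS[m.group(0)] + " ", text)


def tokenize(text):
    """Character-level emoticon pass followed by a plain whitespace split."""
    return replace_emoticons(text).split()


print(tokenize("Great day :-) but no chocolate (T_T)"))
```

Mapping the Western and Eastern variants to the same token, as done here for the smileys, lets the classifier treat them as one feature.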

Fun fact: Japanese emoticons are known as kaomoji, literally translated as “face characters”. :-) and (^^) are both smileys. If you take a closer look at them, the Western one has a smiling mouth, but its Japanese variant doesn’t; its eyes express the joy. In Western culture, smiling is mostly done with the mouth, while the Japanese tend to silently give a friendly nod, expressing their joy with the eyes. Even in their language, a synonym for smiling is Me wo hosomeru (narrowing one’s eyes). This difference isn’t specific to smiling; Japanese emoticons simply focus more on the eyes:

Sad face: Western :-( Japanese (><)

Sorry face: Western :-c Japanese m(_ _)m

Crying face: Western ;-( Japanese (T_T)

Angry face: Western :-@ Japanese (ーー”)

Definition of neutral

This is really important! You have to agree on what is neutral and what is not. The way you define the categories and annotate the dataset defines your classifier, so you have to be consistent. We recommend tagging the following types of sentences as neutral: objective texts (they do not contain explicit sentiment), irrelevant information and texts containing wishes (most of them are neutral, like “I wish chocolate was with hazelnuts.”).

Multiple sentiments in one text

Some texts can be really complex to analyze, with different segments carrying different sentiments. For instance: “The food was fantastic but the service was terrible”. This sentence contains both negative and positive sentiment, but we cannot say it is neutral. Without any context, even we aren’t sure how to label it, so how could a computer be?
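One possible way to handle such texts is to stop forcing a single label onto them and to score each clause separately. The sketch below assumes a toy `LEXICON` and a short list of contrastive conjunctions, both hypothetical and far too small for real use; it is meant to show the idea, not a finished method.

```python
import re

# Hypothetical toy lexicon, for illustration only.
LEXICON = {"fantastic": 1, "great": 1, "good": 1, "terrible": -1, "bad": -1, "awful": -1}

# Split on a few contrastive conjunctions and on sentence punctuation.
_SPLIT = re.compile(r"\bbut\b|\bhowever\b|\balthough\b|[.;!?]", re.IGNORECASE)


def clause_sentiments(text):
    """Return (clause, label) pairs, one per non-empty clause."""
    results = []
    for clause in _SPLIT.split(text):
        clause = clause.strip(" ,")
        if not clause:
            continue
        words = re.findall(r"[a-z']+", clause.lower())
        score = sum(LEXICON.get(w, 0) for w in words)
        label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        results.append((clause, label))
    return results


for clause, label in clause_sentiments("The food was fantastic but the service was terrible"):
    print(label, "->", clause)
```

Reporting one label per clause keeps both opinions visible instead of averaging them into a misleading “neutral”.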

All these opinion mining problems are still open issues, and the best approach is to tune your algorithm to your problem as well as possible. If you are analyzing tweets or messages, always take emoticons into account. If you are studying political reviews, correlate the polarity with current events. For product reviews, weigh the different product properties, because not all features are equally important. You cannot solve all the problems, but you can minimize them. Sentiment analysis can automate some of your work, so why not give it a try?

In our next article, we will teach you how to implement rule-based systems.