There have been numerous times when a manager or a colleague from another department ran into our office and, obviously agitated, asked: “Who’s done this? Who changed the configuration files? Who has overwritten the backups? Client X is now having issues…” and so on. The template is always the same.

While our DevOps department enforces a blameless culture and blameless post-mortems (every department should), there’s still an unresolved issue at hand, a mystery waiting to be solved. So you connect to the server and start troubleshooting, knowing in advance that there’s not much you can really do. By default, ~/.bash_history won’t tell you the date and time a command was executed (that requires HISTTIMEFORMAT to be set), and a paranoid user may even have cleared their history, or the history of a shared user they switched to. syslog and auth.log lack the verbosity you need to pinpoint the exact time of the mishap and the exact files whose changes affected the whole system.

Still, you might enjoy a good challenge. Someone really did make changes on a production server. They may be easy to fix, but you still wonder how transparent it is who’s doing what there. And mind you, we haven’t even touched the security aspect yet; this is all strictly operational. All of this takes time and focus. It is as if you entered a pitch-black cave with merely a flashlight: you’re in the dark and illuminate only the spot where your attention (and thus the flashlight) goes. Just as an ultrasonic scanner would let you map a dark cave faster than a flashlight, it would make sense to have a ‘mapped out’ story of what was going on inside your own digital cave of infrastructure. It would greatly shorten the time to resolution, improve your mean time to repair (MTTR) and spare you and your colleagues the headaches of this toil. You could even graph the actions that led to an issue, failure or outage.

Tools

Playing detective won’t get you far without the right tools. So what might those tools be?

Some form of log aggregation is mandatory. However, aggregation misses the point if there’s literally nothing meaningful to parse out of the logs: for example, you check the audit logs and find only that user X became user Y, and that’s the end of the line. What user Y did afterwards stays in the dark.

We all have limited time, so I weighed the tools against a few factors:

- ease of setup

- effectiveness/flexibility of the tool

- integration with log aggregation and visualisation tools

Stat

stat is a nice tool for checking file metadata, but it doesn’t tell the whole truth. For example, it shows the time of a file’s last change, but not the user who changed it. I also noticed that stat shows identical Modify and Change timestamps:

File: ‘DATABASE_CONNECTION’

Size: 160 Blocks: 8 IO Block: 4096 regular file

Device: ca01h/51713d Inode: 400173 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 1001/userX) Gid: ( 1001/userX)

Access: 2018-07-06 12:05:39.891078615 +0000

Modify: 2018-07-06 12:05:34.183078615 +0000

Change: 2018-07-06 12:05:34.183078615 +0000

Birth: -

When I modify this file as root with Vim, this is the new output of stat:

File: ‘DATABASE_CONNECTION’

Size: 161 Blocks: 8 IO Block: 4096 regular file

Device: ca01h/51713d Inode: 400429 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 1001/userX) Gid: ( 1001/userX)

Access: 2018-07-07 11:30:16.099078615 +0000

Modify: 2018-07-07 11:30:16.099078615 +0000

Change: 2018-07-07 11:30:16.103078615 +0000

Birth: -

Check the inode: it has changed. This means that writing and quitting in Vim results in a new file rather than an in-place edit. Moreover, when a shared user account is used (as is the case with our client), it gets even harder to pinpoint the exact user behind a change. stat is nice and easy, but it lacks the information we need.
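To see why the inode changes, here is a minimal Python sketch, assuming a POSIX filesystem. It contrasts rewriting a file in place with saving by writing a brand-new file and renaming it over the old one. The helper names are made up for this illustration, and whether Vim takes the second path depends on its backup settings (such as 'backupcopy'), so this is not Vim’s actual code path.

```python
import os
import shutil
import tempfile


def save_in_place(path: str, data: str) -> None:
    """Truncate and rewrite the existing file: the inode stays the same."""
    with open(path, "w") as f:
        f.write(data)


def save_by_replace(path: str, data: str) -> None:
    """Write a new file next to `path`, then rename it over `path`.

    The directory entry ends up pointing at a new inode.
    """
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as tmp:
        tmp.write(data)
    if os.path.exists(path):
        shutil.copymode(path, tmp_path)  # keep the original permission bits
    os.replace(tmp_path, path)  # atomic rename within one filesystem


with tempfile.TemporaryDirectory() as workdir:
    target = os.path.join(workdir, "DATABASE_CONNECTION")
    save_in_place(target, "host=db1\n")
    original_inode = os.stat(target).st_ino

    save_in_place(target, "host=db2\n")
    inode_after_in_place = os.stat(target).st_ino

    save_by_replace(target, "host=db3\n")
    inode_after_replace = os.stat(target).st_ino

print("in-place edit kept the inode:", inode_after_in_place == original_inode)
print("replace-style save changed the inode:", inode_after_replace != original_inode)
```

Both lines print True. Watching the inode therefore tells you that a file was replaced, but still not who replaced it.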

Linux Audit System

Since our client’s infrastructure runs Ubuntu, I chose to experiment with the Linux Audit System (auditd) on an older Ubuntu 14.04 VM.

sudo apt-get install auditd does the trick and with

auditctl -w /apps/testapp/monitored_folder -p war -k testapp

a watch is quickly placed on the monitored_folder directory, which is then monitored recursively; testapp is a human-friendly key under which the audit logs can later be searched. The -p flags select which kinds of access get logged: w (write), a (attribute change) and r (read).

Note

monitored_folder cannot be the root directory /.
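Rules added with auditctl only live in the running kernel, so they are lost on reboot unless they are also written to a rules file (for example /etc/audit/audit.rules; the exact location depends on the audit version). A small, hypothetical Python helper can generate such lines while enforcing the constraints above: an absolute path other than /, and permissions drawn from r, w, x (execute) and a.

```python
VALID_PERMS = set("rwxa")  # read, write, execute, attribute change


def build_watch_rule(path: str, perms: str, key: str) -> str:
    """Return a file watch rule in auditctl syntax: -w PATH -p PERMS -k KEY.

    build_watch_rule is a hypothetical helper, not part of the audit tools.
    """
    if not path.startswith("/"):
        raise ValueError("watch path should be absolute")
    if path.rstrip("/") == "":
        raise ValueError("the root directory / cannot be watched")
    if not perms or not set(perms) <= VALID_PERMS:
        raise ValueError(f"permissions must be drawn from 'rwxa', got {perms!r}")
    # This helper's own convention: keep keys to one word so they are easy to search.
    if not key or any(ch.isspace() for ch in key):
        raise ValueError("key must be a single non-empty word")
    return f"-w {path} -p {perms} -k {key}"


print(build_watch_rule("/apps/testapp/monitored_folder", "war", "testapp"))
# -w /apps/testapp/monitored_folder -p war -k testapp

try:
    build_watch_rule("/", "wa", "everything")
except ValueError as err:
    print("rejected:", err)
# rejected: the root directory / cannot be watched
```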

Be mindful that auditd isn’t necessarily enabled at system boot by itself, so you need to take care of that.

The log is stored in /var/log/audit/audit.log and can be searched with: ausearch -i -k testapp | tail. This command reads the log and keeps only events tagged with the testapp key, while the -i flag interprets numeric entities (user IDs, syscall numbers and so on) as text where possible. Finally, tail prints only the last 10 lines.
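On a machine without ausearch, or on a copy of audit.log pulled elsewhere, the same filtering can be approximated in Python. This is a rough sketch assuming the raw (non -i) record format, where all lines of one event share the msg=audit(TIMESTAMP:SERIAL) header and the key typically appears only on the SYSCALL record. The sample lines are made up, and unlike tail the helper returns the last N events rather than the last 10 lines.

```python
import re
from collections import OrderedDict

# Event header in raw records, e.g. "msg=audit(1530993652.583:3222):"
HEADER_RE = re.compile(r"msg=audit\(([\d.]+):(\d+)\):")
# key=value pairs; values may be wrapped in double quotes
FIELD_RE = re.compile(r'(\w+)=("[^"]*"|\S+)')


def parse_record(line):
    """Return ((timestamp, serial), fields) for one raw audit line, or None."""
    header = HEADER_RE.search(line)
    if header is None:
        return None
    body = line[:header.start()] + line[header.end():]
    fields = {name: value.strip('"') for name, value in FIELD_RE.findall(body)}
    return (header.group(1), int(header.group(2))), fields


def search_by_key(lines, key, last=10):
    """Rough equivalent of `ausearch -k KEY`, keeping only the last N events."""
    events = OrderedDict()  # (timestamp, serial) -> records, in log order
    for line in lines:
        parsed = parse_record(line)
        if parsed is None:
            continue
        event_id, fields = parsed
        events.setdefault(event_id, []).append(fields)
    matching = [records for records in events.values()
                if any(record.get("key") == key for record in records)]
    return matching[-last:]


# Made-up sample lines in the raw audit.log format.
SAMPLE_LOG = [
    'type=SYSCALL msg=audit(1530993652.583:3222): arch=c000003e syscall=82 '
    'success=yes exit=0 auid=1000 uid=0 comm="vim" exe="/usr/bin/vim.basic" key="testapp"',
    'type=CWD msg=audit(1530993652.583:3222): cwd="/root"',
    'type=PATH msg=audit(1530993652.583:3222): item=0 '
    'name="/apps/testapp/monitored_folder/DATABASE_CONNECTION" inode=400488',
    'type=SYSCALL msg=audit(1530993700.101:3230): arch=c000003e syscall=2 '
    'success=yes exit=3 auid=1000 uid=1000 comm="cat" exe="/bin/cat" key="other"',
]

for event in search_by_key(SAMPLE_LOG, "testapp"):
    print([record["type"] for record in event])
# ['SYSCALL', 'CWD', 'PATH']
```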

----

type=PATH msg=audit(07/07/2018 20:00:52.583:3222) : item=0 name=/apps/testapp/DATABASE_CONNECTION inode=400488 dev=ca:01 mode=file,644 ouid=userX ogid=userX rdev=00:00 nametype=NORMAL

type=CWD msg=audit(07/07/2018 20:00:52.583:3222) : cwd=/root

type=SYSCALL msg=audit(07/07/2018 20:00:52.583:3222) : arch=x86_64 syscall=setxattr success=yes exit=0 a0=0x15d5a10 a1=0x7fe3161f1a7f a2=0x17aa210 a3=0x1c items=1 ppid=32736 pid=542 auid=ubuntu uid=root gid=root euid=root suid=root fsuid=root egid=root sgid=root fsgid=root tty=pts0 ses=2083 comm=vim exe=/usr/bin/vim.basic key=(null)

Here I can see that root, with /root as the working directory, ran Vim and changed the monitored file (this particular record is a setxattr call made by vim). However, auid (the audit user ID) tells me which login user stands behind this whole escapade: ubuntu, who became root, most likely via sudo.
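The distinction that matters is between auid, which is set at login and inherited across sudo and su, and uid/euid, which describe the identity the process runs as at that moment. A minimal Python sketch that turns the interpreted SYSCALL record above into a one-line answer to “who did this?”. The who_did_it helper and its wording are my own, and it assumes the key=value layout of ausearch -i output, where a missing login UID is shown as unset.

```python
import re

FIELD_RE = re.compile(r"(\w+)=(\S+)")  # key=value pairs in ausearch -i output

# The SYSCALL record from the ausearch -i output above.
SYSCALL_LINE = (
    "type=SYSCALL msg=audit(07/07/2018 20:00:52.583:3222) : arch=x86_64 "
    "syscall=setxattr success=yes exit=0 a0=0x15d5a10 a1=0x7fe3161f1a7f "
    "a2=0x17aa210 a3=0x1c items=1 ppid=32736 pid=542 auid=ubuntu uid=root "
    "gid=root euid=root suid=root fsuid=root egid=root sgid=root fsgid=root "
    "tty=pts0 ses=2083 comm=vim exe=/usr/bin/vim.basic key=(null)"
)


def who_did_it(syscall_line: str) -> str:
    """Summarise login identity (auid) versus effective identity (euid)."""
    fields = dict(FIELD_RE.findall(syscall_line))
    login_user = fields.get("auid", "?")
    acting_as = fields.get("euid", fields.get("uid", "?"))
    summary = (f"login user {login_user} acting as {acting_as} ran "
               f"{fields.get('comm', '?')} ({fields.get('exe', '?')}): "
               f"syscall {fields.get('syscall', '?')}, "
               f"success={fields.get('success', '?')}, "
               f"session {fields.get('ses', '?')}")
    if login_user not in ("unset", acting_as):
        summary += " [identity changed after login, e.g. via sudo or su]"
    return summary


print(who_did_it(SYSCALL_LINE))
```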

auditd was really easy to install and start, and I was able to set up rules and test them right away without hassle. It seems powerful enough, but I wanted to see what my users were doing from a monitoring perspective, without having to SSH into the server.

Now, in theory, one could pipe this into a Filebeat daemon and push it on to the ELK stack. But someone has probably already thought of this.
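For the do-it-yourself route, each raw audit.log line would first have to become a structured document that a shipper such as Filebeat can forward. A rough Python sketch, assuming the raw record format and UTC timestamps; apart from the conventional @timestamp, the output field names (such as audit_serial) are my own choice, not a schema used by Filebeat or Elasticsearch.

```python
import json
import re
from datetime import datetime, timezone

# Raw header: msg=audit(SECONDS.MILLISECONDS:SERIAL):
HEADER_RE = re.compile(r"msg=audit\((\d+)\.(\d+):(\d+)\):")
FIELD_RE = re.compile(r'(\w+)=("[^"]*"|\S+)')


def audit_line_to_json(line: str) -> str:
    """Convert one raw audit record into a single-line JSON document."""
    header = HEADER_RE.search(line)
    if header is None:
        raise ValueError("not a raw audit record: " + line[:40])
    seconds, millis, serial = header.groups()
    when = datetime.fromtimestamp(int(seconds), tz=timezone.utc)
    when = when.replace(microsecond=int(millis) * 1000)  # three millisecond digits
    doc = {
        "@timestamp": when.isoformat(timespec="milliseconds"),
        "audit_serial": int(serial),
    }
    body = line[:header.start()] + line[header.end():]
    for name, value in FIELD_RE.findall(body):
        doc[name] = value.strip('"')
    return json.dumps(doc, sort_keys=True)


# Made-up raw record, timed to match the interpreted output shown earlier.
SAMPLE = ('type=SYSCALL msg=audit(1530993652.583:3222): arch=c000003e '
          'success=yes auid=1000 uid=0 comm="vim" exe="/usr/bin/vim.basic" key="testapp"')

print(audit_line_to_json(SAMPLE))
```

One JSON object per line (NDJSON) is a convenient shape for log shippers, since each line can be forwarded and indexed on its own.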

Auditbeat

Looking at Elastic’s products, I found a new Beat called Auditbeat. Let’s quickly try to spin it up.

Stack used:

The Auditbeat configuration is changed only minimally, just enough to carry over the watch rule established earlier for testapp in auditd (this time with -p wa, so reads are not logged):

#========================== Modules configuration =============================
auditbeat.modules:

- module: auditd
  audit_rules: |
    ## Watch testapp's monitored folder for writes and attribute changes.
    -w /apps/testapp/monitored_folder/ -p wa -k testapp

- module: file_integrity
  paths:
  - /apps/testapp/*

The file integrity module watches files for changes. Okay, all set: run auditbeat test config and, if it succeeds, auditbeat setup --template --dashboards to quickly initialize everything in Kibana.
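Conceptually, file integrity monitoring boils down to remembering each file’s identity and content hash, then reporting what differs. The Python sketch below is only a polling illustration of that idea, not how Auditbeat is implemented (its module is configured, not coded, and reacts to filesystem events); the snapshot and diff helpers are hypothetical, and the demo reuses the file names from this post.

```python
import hashlib
import os
import tempfile


def snapshot(root):
    """Map every regular file under root to (inode, sha1 of its content)."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as f:
                digest = hashlib.sha1(f.read()).hexdigest()
            state[path] = (os.stat(path).st_ino, digest)
    return state


def diff(before, after):
    """List (change, path) pairs between two snapshots."""
    changes = []
    for path in sorted(before.keys() | after.keys()):
        if path not in after:
            changes.append(("deleted", path))
        elif path not in before:
            changes.append(("created", path))
        else:
            (old_inode, old_hash), (new_inode, new_hash) = before[path], after[path]
            if old_hash != new_hash:
                changes.append(("updated", path))
            if old_inode != new_inode:
                changes.append(("replaced", path))  # new file behind the same name
    return changes


with tempfile.TemporaryDirectory() as watched:
    config = os.path.join(watched, "DATABASE_CONNECTION")
    email = os.path.join(watched, "EMAIL")
    for path, text in ((config, "host=db1\n"), (email, "ops@example.com\n")):
        with open(path, "w") as f:
            f.write(text)
    before = snapshot(watched)

    # Save the config the "new file + rename" way, then delete EMAIL.
    fd, tmp = tempfile.mkstemp(dir=watched)
    with os.fdopen(fd, "w") as f:
        f.write("host=db2\n")
    os.replace(tmp, config)
    os.remove(email)

    changes = [(kind, os.path.basename(path))
               for kind, path in diff(before, snapshot(watched))]

for kind, name in changes:
    print(kind, name)
# updated DATABASE_CONNECTION
# replaced DATABASE_CONNECTION
# deleted EMAIL
```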

Open browser, and there we are:

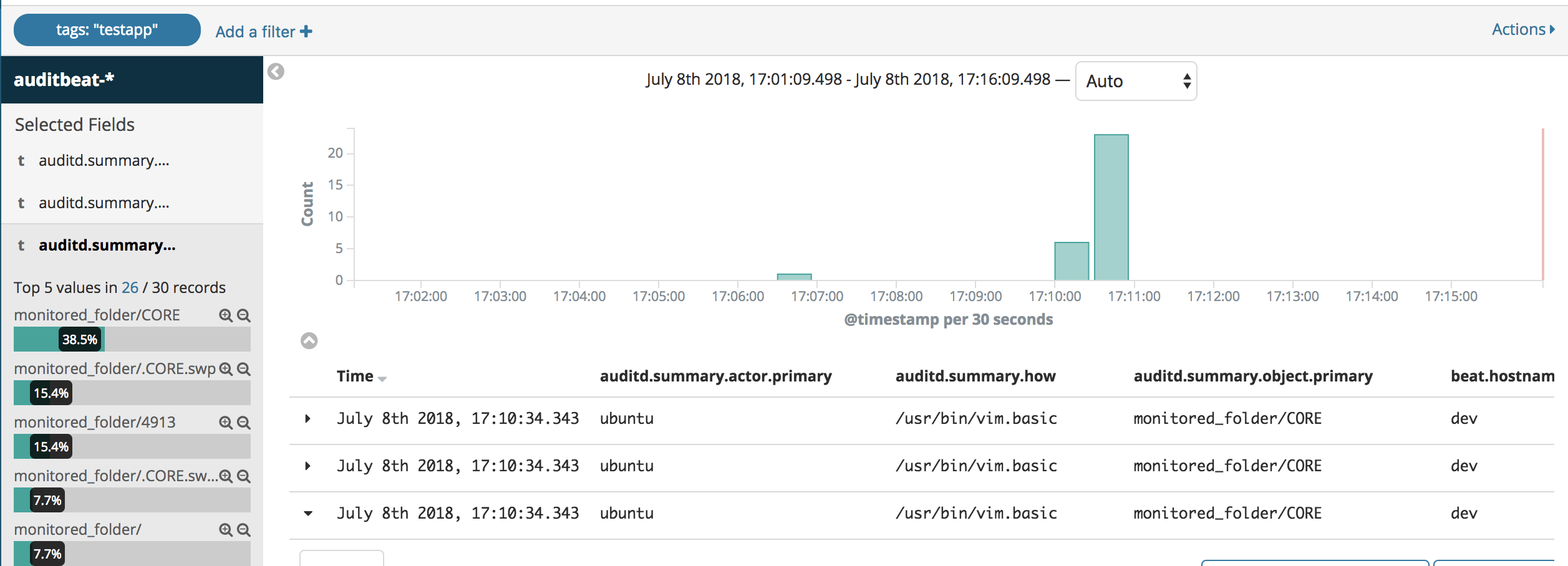

It’s easy to see which logged-in user, acting as root, changed a file, at precisely what time and with which editor. One can even see that Vim does indeed create a new file when saving changes.

I then logged in as another user and did something bad as well. After refreshing Kibana:

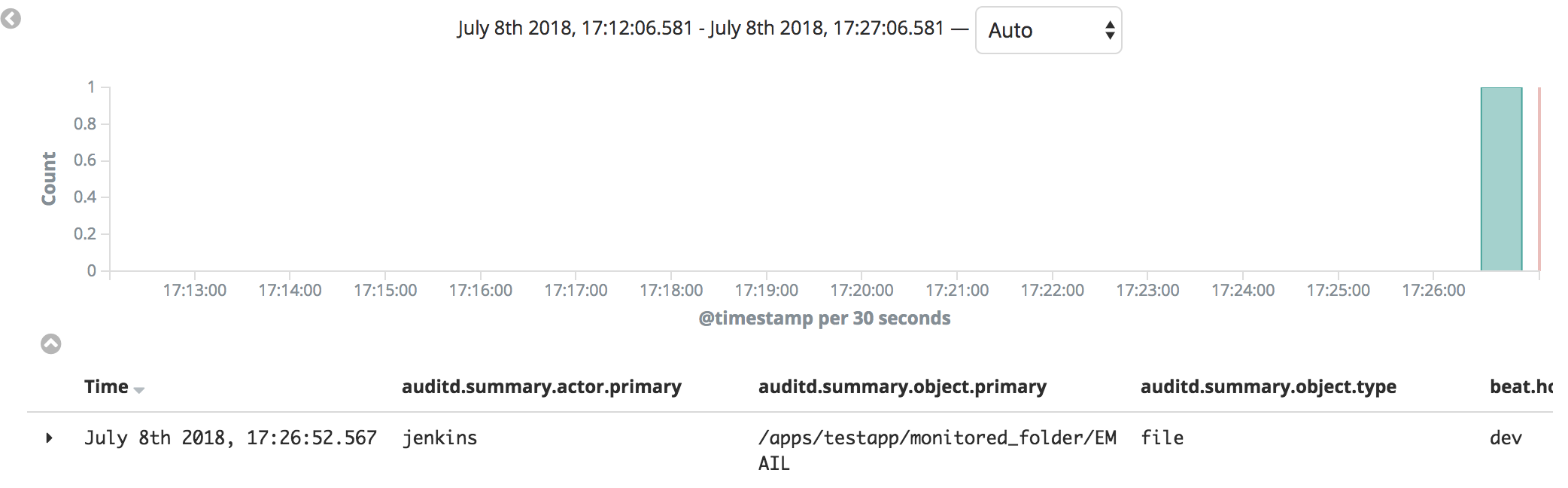

I can now see that user jenkins logged in, switched user and then deleted the EMAIL file. It was a bit difficult to fit everything into a single screenshot, but take my word for it: it showed up.
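What makes such a story reconstructible is that audit records carry the session ID (ses) and the login user (auid), which normally survive su. A minimal Python sketch of grouping already-parsed events by session; the events, times and the jenkins session number are invented to mirror the scenario above, and only the ubuntu session number (2083) comes from the record shown earlier.

```python
from collections import defaultdict

# Invented, already-parsed events mirroring the jenkins scenario above.
EVENTS = [
    {"time": 1532611830.07, "ses": 2101, "auid": "jenkins", "uid": "root", "action": "rm EMAIL"},
    {"time": 1532611800.10, "ses": 2101, "auid": "jenkins", "uid": "jenkins", "action": "ssh login"},
    {"time": 1532611815.42, "ses": 2101, "auid": "jenkins", "uid": "root", "action": "su to root"},
    {"time": 1532611820.00, "ses": 2083, "auid": "ubuntu", "uid": "root", "action": "vim DATABASE_CONNECTION"},
]


def timelines(events):
    """Group events by audit session and order each session chronologically."""
    by_session = defaultdict(list)
    for event in events:
        by_session[event["ses"]].append(event)
    return {ses: sorted(evts, key=lambda e: e["time"])
            for ses, evts in by_session.items()}


for ses, evts in sorted(timelines(EVENTS).items()):
    print(f"session {ses}, login user {evts[0]['auid']}:")
    for e in evts:
        print(f"  {e['time']:.2f}  as {e['uid']:<8} {e['action']}")
```

Even though the deletion ran as root, the session still points back to jenkins.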

What I loved about Auditbeat is that it ticked all of my boxes for this blog post: it was super easy to install (though if the auditd daemon is active, stop it before starting Auditbeat), it integrates smoothly with the rest of the ELK stack we use for monitoring, and it’s versatile (I’ve only scratched the surface here).

Let’s check what else is there.

Go-Audit

I stumbled upon this Medium article. It describes a native Go auditing program that communicates with the kernel through netlink and is said to be scalable and performant. Sounds fantastic!

So I decided to check it out, hands-on:

go get -u github.com/kardianos/govendor

go get -u github.com/spf13/viper

cd go-audit && env GOOS=linux go build

Moved the binary to the server and tried to start it:

Failed to open syslog writer. Error: dial unix /var/run/go-audit.sock: connect: connection refused

I hadn’t read the docs carefully enough: I needed to check the Rsyslog version (minimum 8.20), and the version on the server was 7.4.4. I upgraded Rsyslog and installed the other dependencies as instructed here.
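A minimum-version check like this is easy to get wrong if versions are compared as strings. A minimal Python sketch, assuming plain dotted numeric versions such as the 7.4.4 (installed) and 8.20 (required) above; the installed number would come from something like rsyslogd -v, and the helper names are hypothetical.

```python
def parse_version(text: str) -> tuple:
    """Turn '8.20' or '7.4.4' into a tuple of ints; assumes numeric parts only."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare versions component by component, numerically."""
    return parse_version(installed) >= parse_version(minimum)


print(meets_minimum("7.4.4", "8.20"))  # False: the server from this post
print(meets_minimum("8.4.0", "8.20"))  # False: 4 < 20 numerically
print("8.4.0" >= "8.20")               # True: naive string comparison gets it wrong
```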

Then I had to edit the Rsyslog configuration files, which I did hastily, keeping backups of the original configuration.

With that done, I successfully started go-audit with its example configuration and checked the syslog output:

Jul 26 13:29:17 localhost kernel: [2414218.885961] audit_log_start: 180 callbacks suppressed

Jul 26 13:29:17 localhost kernel: [2414218.885964] audit: audit_backlog=1025 > audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414218.885966] audit: audit_lost=17744 audit_rate_limit=0 audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414218.910337] audit: audit_backlog=1025 > audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414218.910341] audit: audit_lost=17745 audit_rate_limit=0 audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414218.938778] audit: audit_backlog=1025 > audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414218.938782] audit: audit_lost=17746 audit_rate_limit=0 audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414218.955692] audit: audit_backlog=1025 > audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414218.955695] audit: audit_lost=17747 audit_rate_limit=0 audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414219.097907] audit: audit_backlog=1025 > audit_backlog_limit=1024

Jul 26 13:29:17 localhost kernel: [2414219.097911] audit: audit_lost=17748 audit_rate_limit=0 audit_backlog_limit=1024
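These kernel lines can be read mechanically: audit_backlog=1025 > audit_backlog_limit=1024 means the kernel’s queue of pending audit records was full, and audit_lost is a running count of audit records the kernel has dropped. A small Python sketch that summarises such lines; the regular expressions assume exactly the message format shown above, and the summarize helper is hypothetical.

```python
import re

# Lines copied from the syslog excerpt above (first and last pairs only).
KERNEL_LINES = [
    "Jul 26 13:29:17 localhost kernel: [2414218.885964] audit: audit_backlog=1025 > audit_backlog_limit=1024",
    "Jul 26 13:29:17 localhost kernel: [2414218.885966] audit: audit_lost=17744 audit_rate_limit=0 audit_backlog_limit=1024",
    "Jul 26 13:29:17 localhost kernel: [2414219.097907] audit: audit_backlog=1025 > audit_backlog_limit=1024",
    "Jul 26 13:29:17 localhost kernel: [2414219.097911] audit: audit_lost=17748 audit_rate_limit=0 audit_backlog_limit=1024",
]

LOST_RE = re.compile(r"audit_lost=(\d+)")
OVERFLOW_RE = re.compile(r"audit_backlog=(\d+) > audit_backlog_limit=(\d+)")


def summarize(lines):
    """Count backlog overflows and how far the audit_lost counter moved."""
    lost_counts = []
    overflows = 0
    for line in lines:
        lost = LOST_RE.search(line)
        if lost:
            lost_counts.append(int(lost.group(1)))
        if OVERFLOW_RE.search(line):
            overflows += 1
    return {
        "backlog_overflows": overflows,
        "lost_total": lost_counts[-1] if lost_counts else 0,
        # Increase between the first and the last reported counter value.
        "lost_in_window": lost_counts[-1] - lost_counts[0] if len(lost_counts) > 1 else 0,
    }


print(summarize(KERNEL_LINES))
# {'backlog_overflows': 2, 'lost_total': 17748, 'lost_in_window': 4}
```

A growing lost count means the audit trail has holes; raising the kernel backlog limit with auditctl -b (for example auditctl -b 8192) or trimming the rule set are the usual levers.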

This does not reveal much at all: the kernel is only complaining that its audit backlog is full and that events are being lost, as the growing audit_lost counter shows. But at least it’s running. Next, I wanted to send this audit data to Elasticsearch so I could see it. Digging deeper into the documentation, I found out that this calls for a whole technology stack. OK, let’s install Streamstash as the documentation describes. I installed it, copied the .js and upstart config files and tried running it: it didn’t run.

Because of these technology requirements and the time limit I had set for getting the whole thing up, I ditched this pursuit. Pushing the data to Elasticsearch required changes to the Rsyslog configuration, plus Streamstash (a Node.js app) with its own custom configuration, and it still threw errors and refused to run properly… this was just too much hassle simply to get it working.

Conclusion

I’m pleased to have a solution to the “Who changed that?” question. It can now be put to rest, along with the manual detective work, at least as far as file and system changes are concerned.

The Linux Audit System came first, and it has done its job well in the past. Nowadays, however, we need an easier, quicker way to aggregate these events and display them on demand. Auditbeat builds on top of the Linux Audit System, integrates smoothly with the rest of the ELK stack and works out of the box even with the most basic default settings. It is a well-documented project, once you get used to Elastic’s documentation. Go-Audit took a different course. It is probably a good open-source project, with its documentation written on GitHub pages, but I found it more tedious to get working: its technological dependencies force you to go out of your way and “digress” multiple times to get the whole thing running, and frankly, this project lost me because of it.

In these quickly evolving technological times, with ever more complex machinery and programs in the palm of our hands, I tend to look for simplicity and effectiveness. Software should be reasonably simple to configure and should integrate seamlessly in order to do its complex magic.

Another area of interest is auditing database access and the queries that are run. What type of auditing setup do you use? Let me know in the comments below. Till next time, happy auditing!